Mixed Reality / Augmented Reality


Overview

Most of the VR systems we have experienced in this decade offer poor realism because their environments are synthesized entirely within a computer. To overcome this limitation, people began to bring the rich information of the real world into VR systems, and technology that handles the real physical world together with the virtual synthesized world became essential. Mixed Reality (MR) is the technology that realizes an environment in which the real and virtual worlds are seamlessly integrated.

Article


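| Title | Image | Source |

| Semiconductor chip implanted in the brain: experiencing a virtual world that feels like reality | | Chosun Ilbo, 2003. 5. 29 |

| A "world of fantasy" that composites the real and virtual worlds in real time | | Chosun Ilbo, 2003. 1. 10 |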

Project

| Title | Sponsor | Term |

| Program testing and packaging for commercialization of an immersive learning system | Electronics and Telecommunications Research Institute | 2009.5 ~ 2010.1 |

| Development of object tracking and gesture recognition technology for low-cost cameras | Electronics and Telecommunications Research Institute | 2008.3 ~ 2009.2 |

| Development of KERIS augmented reality marker recognition technology | CREDU | 2007.5 ~ 2007.12 |

| Technical consulting on the development of a 3D modeler using IBR techniques | NVL Soft. | 2007.5 ~ 2007.10 |

| Commercialization testing of geometric markers and a mixed reality toolkit | Electronics and Telecommunications Research Institute | 2006.4 ~ 2007.1 |

| Augmented Reality Technology | Korea Science and Engineering Foundation | 2000.8 ~ 2003.2 |

| Systems for recognizing and synthesizing of facial expression and gesture | Ministry of Science and Technology | 1998.11 ~ 2000.11 |

| Research and Development of Computer's Kansei Interface | Ministry of Science and Technology | 1996.11 ~ 1998.10 |

Research

Augmented Reality

Human Image Recognition & Synthesis

3D Cyber Character Animation

| Title | Summary | Movie |

| Oh! My Baby | Oh! My Baby is a full 3D real-time parenting simulation game, developed by Adamsoft Co., in which you and your partner create and raise a baby through a variety of events and games. The baby character bears the distinguishing features of its parents. Your virtual baby recognizes your voice and, depending on how you rear it, develops its own unique character traits. | |

| Puppeteer | Puppeteer, a 3D cyber character animation authoring tool, was developed with Adamsoft Co. as an application of the general human head/face & body model generation tool. - Facial animation with simplified action units - Real-time motion control - Smooth skinning - Real-time lip-sync - Non-linear character animation editing - Script-based digital video production |

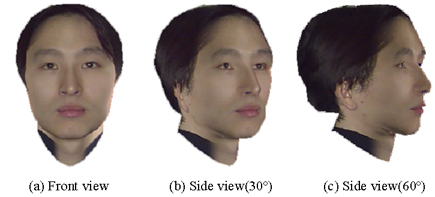

| General Human Head/Body Model Generation Tool | This tool focuses on a method for modeling a variety of human heads and bodies from a single general model. Low-level parameters of the human model can be edited directly, and high-level parameters can be controlled to produce various human head/body models. Modeling Demo | |
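The split between high-level and low-level parameters described for the model generation tool can be sketched as follows. This is only an illustration under stated assumptions: the parameter names (`jaw_width`, `childlike`, and so on), the offset values, and the linear mapping are hypothetical and are not taken from the actual tool.

```python
# Hypothetical low-level parameters of a generic head model (names are illustrative).
GENERIC_HEAD = {"jaw_width": 1.0, "nose_length": 1.0, "eye_spacing": 1.0}

# Hypothetical high-level controls: each one maps to offsets on low-level parameters.
HIGH_LEVEL = {
    "masculine": {"jaw_width": 0.20, "nose_length": 0.10},
    "childlike": {"jaw_width": -0.15, "eye_spacing": 0.10},
}


def generate_head(high_level=None, overrides=None):
    """Derive a head variant from the generic model.

    high_level: dict of control name -> amount (assumed linear scaling).
    overrides:  dict of low-level parameter -> value, applied last.
    """
    params = dict(GENERIC_HEAD)  # start from a copy of the general model
    for name, amount in (high_level or {}).items():
        for low_level_name, delta in HIGH_LEVEL[name].items():
            params[low_level_name] += amount * delta
    params.update(overrides or {})  # direct low-level edits take precedence
    return params
```

For example, `generate_head({"childlike": 1.0}, {"nose_length": 0.9})` applies one high-level control and then a direct low-level edit on top of it.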

Web-based Multi-user 3D Animation System

| Title | Summary | Movie |

| AMINET | This system is realized as a distributed system over the WWW. It brings geographically dispersed users together in a single virtual world and supports interaction both among multiple users and between users and the elements of the virtual world. The avatars that represent users' characteristics are rendered more realistically by using high-quality animation, for example facial expression animation, gesture, and full-body animation. More information... |

Interactive Artificial Life in a Virtual Environment

| Title | Summary | Movie |

| Chorongi | A cyber character is a kind of artificial life in a virtual environment. To be a life form in the virtual world, a cyber character needs sensors and control systems. Current cyber characters lack direct, immersive interaction capabilities, so we add interactivity to them by giving them smart, reactive action capabilities for perceiving and communicating with the real world. System overview | Behavior-based Control System Animation Overview Gesture Recognition Demo Scene Video: 1,2,3 |
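A behavior-based control system of the kind named above is often organized as a prioritized list of simple behaviors, each reacting to sensor input. The following is a minimal sketch under that assumption; the behavior names, percept keys, and action labels are hypothetical and do not describe Chorongi's actual implementation.

```python
def avoid_obstacle(percept):
    # Highest priority: react when something is too close (threshold is an assumption).
    if percept.get("obstacle_distance", float("inf")) < 0.5:
        return "step_back"
    return None


def respond_to_gesture(percept):
    # React to a gesture recognized from the real-world user (labels are hypothetical).
    responses = {"wave": "wave_back", "beckon": "approach"}
    return responses.get(percept.get("gesture"))


def wander(percept):
    # Default behavior when nothing else applies.
    return "wander"


# Behaviors are listed from highest to lowest priority.
BEHAVIORS = [avoid_obstacle, respond_to_gesture, wander]


def select_action(percept):
    """Return the action of the first behavior that responds to the percept."""
    for behavior in BEHAVIORS:
        action = behavior(percept)
        if action is not None:
            return action
    return "idle"
```

Ordering the list by priority lets a reactive behavior such as obstacle avoidance override a social response without either behavior knowing about the other.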

Gesture-based VR Interface

| Title | Summary | Movie |

| Hand Gesture Recognition System | - Hand feature extraction from a single camera - VR navigation by hand gesture recognition - Real-time physically based simulation - Car navigation simulation in a virtual environment |
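Once a gesture has been recognized, VR navigation amounts to mapping each gesture label to a change in the viewer's pose. The sketch below illustrates that mapping in 2D; the gesture labels, step size, and turn angles are assumptions chosen for illustration, not the system's actual gesture vocabulary.

```python
import math

# Hypothetical mapping from recognized gesture labels to navigation commands.
GESTURE_TO_COMMAND = {
    "point": ("move", 1.0),        # move forward
    "fist": ("stop", 0.0),         # stay in place
    "tilt_left": ("turn", 15.0),   # turn left by 15 degrees
    "tilt_right": ("turn", -15.0), # turn right by 15 degrees
}


def navigate(pose, gesture, step=0.5):
    """Update an (x, y, heading_degrees) pose from one recognized gesture.

    Unknown gestures are treated as "stop" so recognition errors do not move the viewer.
    """
    x, y, heading = pose
    action, amount = GESTURE_TO_COMMAND.get(gesture, ("stop", 0.0))
    if action == "move":
        radians = math.radians(heading)
        x += step * amount * math.cos(radians)
        y += step * amount * math.sin(radians)
    elif action == "turn":
        heading = (heading + amount) % 360.0
    return (x, y, heading)
```

Calling `navigate` once per recognized frame, and feeding the returned pose back in, yields a simple navigation loop.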

3D Scene Reconstruction

| Title | Summary | Movie |

| 3D Scene Reconstruction | This system is aimed at human motion analysis, modeling, and animation, and covers the following research topics: camera calibration, dynamic image capturing, image-based 3D modeling, mixed reality technology, and human animation. | |
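Camera calibration, the first topic listed above, usually means estimating the parameters of a pinhole camera model so that 3D points can be related to image pixels. The sketch below shows only the projection step of that model, with lens distortion ignored; the intrinsic values in the usage note are illustrative assumptions, not calibration results from this system.

```python
def project_point(point, fx, fy, cx, cy):
    """Project a 3D point in camera coordinates onto the image plane.

    fx, fy: focal lengths in pixels; cx, cy: principal point in pixels.
    Uses the ideal pinhole model (no lens distortion).
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    u = fx * x / z + cx
    v = fy * y / z + cy
    return (u, v)
```

With assumed intrinsics `fx = fy = 800`, `cx = 320`, `cy = 240`, the point `(1, 2, 4)` projects to pixel `(520.0, 640.0)`. Calibration is the inverse problem: recovering `fx`, `fy`, `cx`, and `cy` from known 3D-to-2D correspondences.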

Level-of-Details (LODs) Modeling of 3D Objects